When "Trust in Science" Leads to a Susceptibility to Pseudoscience

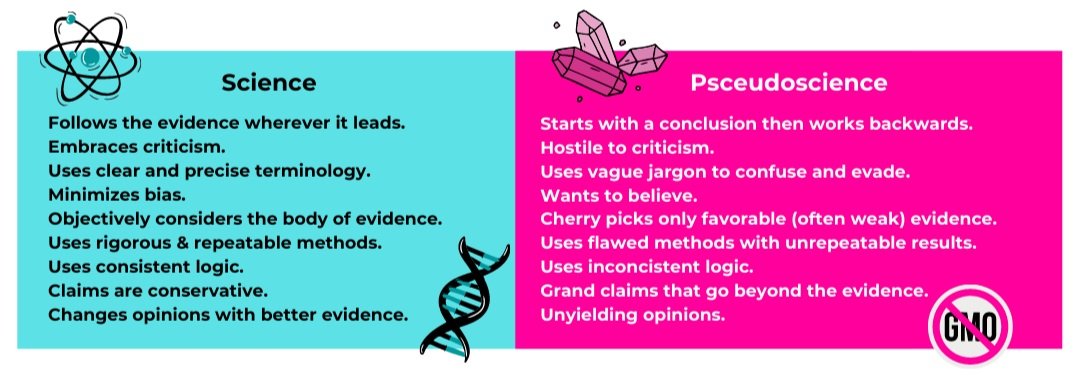

Ironically, trust in science may increase vulnerability to pseudoscience. That finding runs counter to campaigns that promote broad trust in science as an antidote to misinformation. I suppose it's something for all scicommers to think about when communicating science. Factoids are great, but our focus should also include strategies that promote science literacy and critical thinking.

In a study published a few months ago in the Journal of Experimental Social Psychology, O’Brien et al. (2021) found that trust in science can make people more vulnerable to pseudoscience. The study had two key findings:

1) people who trust science are more likely to believe misinformation that cites scientific references than misinformation that doesn't, and

2) reminding people of the need for critical evaluation lowers the chances of believing this misinformation, whereas reminding them to “trust science” does not.

To help people avoid falling for pseudoscience, trust has to be paired with science literacy and critical thinking.

The last two years have highlighted how much the impact of science hinges on public trust. However, broad trust can cause issues, and I gotta say, this is something I see constantly on social media when it comes to pseudoscience. It can be hard to tell science from pseudoscience when claims come from credentialed people or seemingly scientific organizations, especially in fields of expertise that aren’t your own. This post dives into some of my thoughts on the topic. What do you think?

Seemingly scientific organizations aren’t always so scientific: EWG as an example

To the average person, info from the EWG (Environmental Working Group) seems credible ("Look at all their references! They have scientists who work for them!" etc.). Alas, when you actually go through their references, you quickly spot a troubling trend: their sources are blatantly cherry-picked and often misrepresented to fit their agenda (a red flag for pseudoscience). Here's one example: their methylparaben entry in the Skin Deep database.

For more on the EWG, check out our post on the topic from a few years back: A Case Against the EWG. The market traction they have gotten, especially from larger brands who should know better, is truly disappointing and disheartening. Fear sells.

Just because they’re a scientist or medical professional doesn’t mean they’re not spreading pseudoscience and misinformation.

Just because someone has credentials doesn't mean they're right. It doesn't mean they have expertise in the subject they're talking about, and it doesn't mean they aren't using shady practices (e.g., not disclosing sponsorships, something I've been seeing more and more with Insta-famous expert accounts). Also, questionable 'authorities' often genuinely believe the misinformation they spread.

This makes things SO HARD for the average person. "Doing your own research" in fields that aren't your own is problematic, especially given the sheer amount of info out there and our limited time... At some point, we have to trust reputable, relevant experts. But how do we know which experts are trustworthy?

Trust in science has to be paired with science literacy & critical thinking.

Science literacy is not about one’s ability to regurgitate facts, but rather about the ability to understand the scientific process and reason scientifically.

Crucially, not every study is as good as the next. Science is a human enterprise: predatory journals are a thing, publication bias persists, p-value hacking happens, and so on. This is why looking at the *body of scientific evidence* is so important. I see this as a point of confusion A LOT on this platform... Critically reading one study generally involves reading a whole bunch of other studies. Are the results an outlier compared with the rest of the evidence out there? Also, in real-life research, small disagreements are par for the course, and they're rarely a big deal. It just becomes more of a "let's run some experiments and see who's right" kinda thing.

Critical thinking involves being critical of your own thinking. We all want to view ourselves as rational beings, but that’s the greatest self-deception of all. Cognitive biases are the result of your brain’s attempts to make sense of the complicated world around you more easily. Your mind tends to take the path of least resistance unless you make a conscious, higher-energy effort to step outside what comes naturally. Being aware of your biases, considering how they may influence your thinking, challenging them, and thinking about what factors you may have missed are all crucial. While we all have some inherent sense of logic, we are overwhelmingly emotional animals. We have the capacity for logic, but logic and critical thinking are trained skills that need to be developed and practiced. The worst cognitive bias is the one you’re not aware of.

“When most of us ‘research’, we formulate an initial opinion the first time we hear about something, we evaluate everything through the lens of our gut feeling, and we selectively accept information that confirms our preconceptions. If you ‘do your research’, no doubt you can find websites and even medical professionals that hold your opinion. However, don’t fool yourself. You aren’t doing research. Scientific consensus among legitimate and relevant experts, and the body of evidence, is remarkably valuable. But only if we listen to it. This means you need to be humble, and admit to yourself when you lack the necessary skills to evaluate the science in front of you. And to be open-minded to understand that your preconceptions may be wrong.”

Sources

O'Brien, T. C., Palmer, R., & Albarracin, D. (2021). Misplaced trust: When trust in science fosters belief in pseudoscience and the benefits of critical evaluation. Journal of Experimental Social Psychology, 96, 104184.

Piejka, A., & Okruszek, Ł. (2020). Do you believe what you have been told? Morality and scientific literacy as predictors of pseudoscience susceptibility. Applied Cognitive Psychology, 34(5), 1072-1082.